Storing models with Polaris-Studio

The anatomy of a Polaris model repository (or a stored Polaris model) differs from the Anatomy of a Polaris model mainly in

the format the data is stored in. As it is desirable to version-control model inputs to track changes and document the

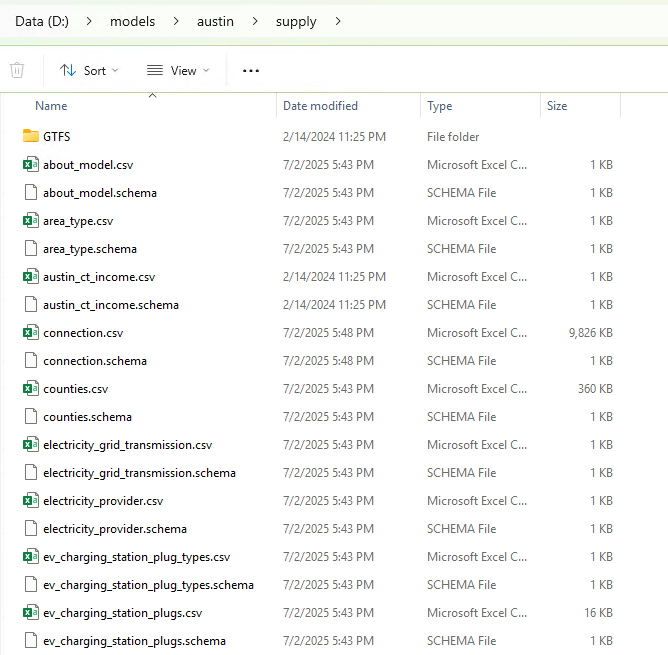

model evolution, data that is stored in SQLite databases when operating POLARIS, are instead stored in CSV files, a diff-able format, within a sub-directory (supply for the Supply.sqlite, demand for the Demand.sqlite, etc).

Polaris-Studio has long provided a solution for dumping SQLite databases to CSV, where the schema and data of each table are exported as CSV to a *.schema file and a *.csv file, respectively. Geometry columns are converted to Well-Known Text (WKT) format, and the table SRIDs are stored in a separate file called srids.csv. An example folder structure/contents is shown below:

The data dump, most often used with the supply database, can be performed with a single line of code, as shown below. The dumped files are written to the supply folder inside the model root.

from polaris.network.network import Network

net = Network.from_file("path/to/supply-model.sqlite", run_consistency=False)
net.ie.dump(net.path_to_file.parent / "supply", extension="csv")  # "parquet" is also supported
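For intuition about what a per-table dump produces, the following is a minimal standard-library sketch. It is not Polaris-Studio's implementation: the `dump_table` helper, the column layout of the schema file, and the row ordering are assumptions made for illustration, and geometry handling (WKT conversion and srids.csv) is left out.

```python
import csv
import sqlite3
import tempfile
from pathlib import Path


def dump_table(conn: sqlite3.Connection, table: str, folder: Path) -> None:
    """Write one table as <table>.schema (column definitions) and <table>.csv (rows)."""
    folder.mkdir(parents=True, exist_ok=True)

    # PRAGMA table_info yields: cid, name, type, notnull, dflt_value, pk
    columns = conn.execute(f'PRAGMA table_info("{table}")').fetchall()
    with open(folder / f"{table}.schema", "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(["cid", "name", "type", "notnull", "dflt_value", "pk"])
        writer.writerows(columns)

    names = [col[1] for col in columns]
    with open(folder / f"{table}.csv", "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(names)
        # A stable row order keeps diffs between commits small
        writer.writerows(conn.execute(f'SELECT * FROM "{table}" ORDER BY 1'))


# Tiny in-memory database standing in for a supply file (hypothetical table)
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE node (node INTEGER PRIMARY KEY, x REAL, y REAL)")
conn.executemany("INSERT INTO node VALUES (?, ?, ?)", [(2, 1.5, 2.5), (1, 0.0, 0.0)])

out_dir = Path(tempfile.mkdtemp()) / "supply"
dump_table(conn, "node", out_dir)
csv_text = (out_dir / "node.csv").read_text()
```

Sorting rows before writing is a design choice of this sketch: it means that inserting rows in a different order does not show up as a change in the version-controlled file.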

The dumping procedure also accepts the “parquet” extension, which is a more efficient format for storing data, but not diff-able. It is important to note that Polaris-Studio is smart enough to detect the format of existing files when dumping database tables, and will switch the format of the dumped files to match the existing files in the folder.
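The format-matching behaviour described above can be pictured with a small sketch. The `pick_extension` helper and the file naming (`<table>.csv`, `<table>.parquet`) are hypothetical and only illustrate the idea; Polaris-Studio's actual detection logic may differ.

```python
import tempfile
from pathlib import Path

SUPPORTED = ("csv", "parquet")


def pick_extension(folder: Path, table: str, requested: str = "csv") -> str:
    """Return the extension already used for `table` in `folder`, else `requested`."""
    for ext in SUPPORTED:
        if (folder / f"{table}.{ext}").exists():
            return ext
    return requested


# Hypothetical folder with one table per format already present
folder = Path(tempfile.mkdtemp())
(folder / "link.csv").touch()
(folder / "connection.parquet").touch()

choices = {t: pick_extension(folder, t, requested="csv") for t in ("link", "connection", "zone")}
```

Under this scheme an existing file always wins over the requested extension, so a table only changes format when its old file is removed first.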

In our experience, it is sensible to store tables in a mix of formats. Smaller tables and those we expect users to edit themselves (e.g. links/nodes, EV chargers, zones, etc.) are kept in CSV format, while very large tables and those created by automated procedures (Connection, Location_Links, signal-related tables, transit-related tables, etc.) are stored in parquet. For the latter we rely on testing procedures instead of manual review of diffs during merge request reviews.

Testing POLARIS models in Git repositories

When storing models in Git repositories, it may be difficult to analyze the git diff for some of the largest POLARIS tables. For those cases, Polaris-Studio offers tools to analyze and compare tables in a model repository, providing the user with a summary of differences, as shown below.

With this testing, we can compare supply and freight tables between two branches (e.g. the current main branch and a new feature branch), as well as exogenous trip and population data, for which we generate visual reports.

from pathlib import Path
import json

# compare_freight_tables is assumed to live alongside compare_supply_tables
from polaris.utils.testing.model_comparison.compare_dbs import compare_freight_tables, compare_supply_tables
from polaris.utils.testing.model_comparison.compare_trip_files import compare_exogenous_trips
from polaris.utils.testing.model_comparison.compare_populations import compare_populations

# Model repository root, resolved relative to this script
model_root = Path(__file__).parent.parent.parent
image_dir = Path("/tmp/image_report")

# Compare supply and freight tables and collect a text report
report = ["SUPPLY TABLES: \n\n"]
report.extend(compare_supply_tables(model_root, "/tmp/new_branch"))
report.append("FREIGHT TABLES: \n\n")
report.extend(compare_freight_tables(model_root, "/tmp/new_branch"))

with open(model_root / "model_diff.json", "w") as f:
    f.write(json.dumps({"body": "\n".join(report)}))

# Compare all exogenous demand files and save images
comparisons = compare_exogenous_trips(model_root, Path("/tmp/new_branch"))
image_dir.mkdir(parents=True, exist_ok=True)
for name, fig in comparisons.items():
    fig.savefig(image_dir / f"{name}.png", dpi=400)

# Compare populations and save images, without overwriting the trip figures
comparisons = compare_populations(model_root, Path("/tmp/new_branch"))
for name, fig in comparisons.items():
    assert not (image_dir / f"{name}.png").exists()
    fig.savefig(image_dir / f"{name}.png", dpi=400)

At ANL, we use this tool in our CI system to return the JSON report as a message in the merge request, which we find very useful when reviewing proposed changes to models.
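To illustrate the kind of summary a table comparison can report, here is a minimal standard-library sketch that counts added, removed and changed rows between two versions of a CSV table. The `summarize_table_diff` helper, the key column and the sample data are hypothetical; this is not how the Polaris-Studio comparison functions are implemented, and their report format may differ.

```python
import csv
import io


def summarize_table_diff(old_csv: str, new_csv: str, key: str) -> dict:
    """Count rows added, removed and changed between two CSV texts, matched on `key`."""
    old = {row[key]: row for row in csv.DictReader(io.StringIO(old_csv))}
    new = {row[key]: row for row in csv.DictReader(io.StringIO(new_csv))}
    shared = old.keys() & new.keys()
    return {
        "added": len(new.keys() - old.keys()),
        "removed": len(old.keys() - new.keys()),
        "changed": sum(1 for k in shared if old[k] != new[k]),
    }


# Hypothetical link table on the main branch and on a feature branch
main_branch = "link,lanes\n1,2\n2,2\n3,1\n"
feature_branch = "link,lanes\n1,2\n2,3\n4,1\n"
summary = summarize_table_diff(main_branch, feature_branch, key="link")
```

A count-based summary like this stays readable for tables with millions of rows, where a line-by-line diff would not.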

In our CI systems we also perform bare-minimum testing that consists of building the model and running a set of critical model checks, which has also proven very useful in reducing the number of problematic changes committed to our model repositories.

from polaris.utils.testing.build_model_run_critical_tests import critical_network_tests

critical_network_tests("Austin", ".", "/tmp/model_build")

Rebuilding models from Git repositories

When models are stored in Git repositories, Polaris-Studio provides a simple way to rebuild the model into the databases:

from polaris.project.polaris import Polaris

project = Polaris.build_from_git(model_dir="/tmp/grid_from_git", city="Grid", inplace=True, overwrite=True, scenario_name=None)

Rebuilding models from repositories on disk

When a model from a Git repository (or at least one structured as such) is available on disk, with the supply tables in their dumped formats inside the supply folder, Polaris-Studio provides a simple way to rebuild the model into the databases:

from polaris.project.polaris import Polaris

project = Polaris.restore("/tmp/grid_from_git", "Grid", inplace=True, overwrite=True, scenario_name=None)
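Conceptually, restoring is the reverse of dumping: each dumped file is read back into its table. The sketch below shows that step for a single CSV using only the standard library. The `load_csv_into_table` helper and the sample table are hypothetical, and it assumes the table already exists; it does not reproduce what `Polaris.restore` does internally (schema creation, geometry, parquet files, and so on).

```python
import csv
import io
import sqlite3


def load_csv_into_table(conn: sqlite3.Connection, table: str, csv_text: str) -> int:
    """Insert the rows of a CSV (header row first) into an existing table."""
    reader = csv.reader(io.StringIO(csv_text))
    header = next(reader)
    columns = ", ".join(f'"{name}"' for name in header)
    placeholders = ", ".join("?" for _ in header)
    rows = list(reader)
    conn.executemany(f'INSERT INTO "{table}" ({columns}) VALUES ({placeholders})', rows)
    conn.commit()
    return len(rows)


# Hypothetical table definition; values arrive as text and SQLite's column
# affinity converts them to INTEGER/REAL on insert
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE node (node INTEGER PRIMARY KEY, x REAL, y REAL)")
inserted = load_csv_into_table(conn, "node", "node,x,y\n1,0.0,0.0\n2,1.5,2.5\n")
restored = conn.execute("SELECT node, x, y FROM node ORDER BY node").fetchall()
```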